The Challenge of Developing Robot Morality

Imagine living in a world filled with robots. Not just robots that assemble cars in a faraway factory, or that vacuum your floors in the form of small, automated discs. Imagine a world in which robots walk, talk, and interact with you on a daily basis.

While we may not live in this Star Wars-like reality yet, we are getting closer by the day. According to scientists interviewed about the developing world of robotics, “It’s a shorter leap than you might think, technically, from a Roomba vacuum cleaner to a robot that acts as an autonomous home-health aide.” If the technology is almost there, a new set of challenges emerges. The question shifts from “can we?” to “how can we?”

Let’s say robots do begin to function as caretakers and visiting nurses. We may see, in our lifetimes even, a world where home care is affordable and efficient. In this world, robots walk, roll or glide across the home, dispensing medication and checking vital signs for the elderly and infirm.

Now imagine this scenario: an elderly woman has a robot caretaker. She slips and falls, but on the way down grabs a chair for support, which then falls on top of her. She is pinned down and in great pain, as the falling chair has broken one of her ribs. She cries for help. Her robot caretaker comes to her aid. This robot is programmed for two things: to not harm its human, and to follow all instructions it receives. The owner pleads with the robot to lift the chair off her ribcage. As the robot starts to do so, she cries out even louder. Moving the chair is causing her pain, but she insists that the robot move it. The two parts of its programming are at odds. What does the robot do?

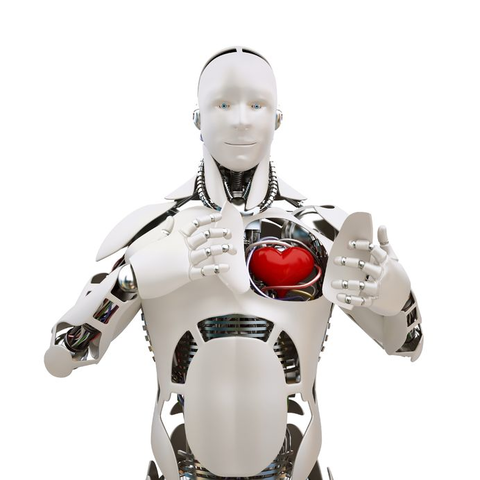

As we start to look at using robots for tasks more complex than welding and floor cleaning, these are the sorts of questions that arise. If a robot is ever to fill a role as complex as health care, it will need a sense of morality: some means of making a decision when its obligations pull in different directions. A human aide could weigh the temporary pain of moving the chair against the lasting benefit of freeing the woman from her living room floor. Humans have the ability to consider options and make critical decisions, even when different interests are in conflict. Robots cannot, or at least, not yet.
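To make the conflict concrete, here is a minimal Python sketch of the scenario. It is purely illustrative: the `Action` fields, the 0-to-1 scores, and both decision functions are assumptions invented for this example, not a description of how any real robot is programmed. The first function applies the two rules as absolutes and gets stuck; the second imitates the human aide by weighing short-term pain against lasting benefit.

```python
from dataclasses import dataclass


@dataclass
class Action:
    name: str
    immediate_pain: float   # hypothetical 0-1 estimate of short-term harm
    lasting_benefit: float  # hypothetical 0-1 estimate of longer-term benefit
    instructed: bool        # did the person ask for this action?


def rigid_decision(action: Action) -> str:
    """Apply both rules from the scenario as absolute constraints."""
    forbidden = action.immediate_pain > 0  # rule 1: do not harm the human
    required = action.instructed           # rule 2: follow all instructions
    if forbidden and required:
        return "conflict"                  # the rules contradict each other
    if forbidden:
        return "refrain"
    return "proceed" if required else "refrain"


def weighed_decision(action: Action) -> str:
    """Weigh temporary pain against lasting benefit, as a human aide might."""
    if action.lasting_benefit > action.immediate_pain:
        return "proceed"
    return "refrain"


# The chair scenario, with made-up scores.
lift_chair = Action("lift chair", immediate_pain=0.4,
                    lasting_benefit=0.9, instructed=True)

print(rigid_decision(lift_chair))    # conflict
print(weighed_decision(lift_chair))  # proceed
```

Even this toy version shows where the hard part lies: the code is trivial, but someone has to decide what the numbers are and who gets to set them.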

Currently, scientists are teaming up with “philosophers, psychologists, linguists, lawyers, theologians and human rights experts” to identify a set of “decision points” that robots would then need to work through in order to develop some code of conduct mimicking morality.

Do you think morality can be emulated by an algorithm, no matter how complex it is and how many factors it takes into account? Some scientists argue that not only is it possible, but that many years down the line, robot morality will actually be more consistent than that of humans. Is there comfort in the idea that robot morality (if it can be developed) would be fixed and unwavering, or is true morality a unique attribute of humans, who are able to make decisions based more on a feeling than a fact?

In a world where the last 60 years of robotic development will likely be eclipsed by the next six, these are no longer concerns relegated to science fiction. Developing more complex robots, and rules to govern their behavior, is the next great challenge for our society. Discuss your beliefs and concerns together, as we face a unique set of challenges that will shape not only the new age of robotics, but the future of human conduct as well.
